Kubernetes GKE setup (Batteries included)

From Terraform setup to cluster autoscaler, a WordPress app with HPA configured, ingress controller, monitoring, and load testing

Things to look for

this blog post is a continuation of

Things to consider before we start with the configuration

The main goal of this post is to show how to configure a managed Kubernetes cluster running our application of choice, made highly available with the Horizontal Pod Autoscaler (HPA) and health checks for WordPress.

It also contains some WordPress optimizations, but these should not be copied as-is into your own production environment.

It provisions the infrastructure via Terraform / OpenTofu

The cluster is capable of autoscaling

Manifests are included so you can deploy it yourself

We will provision a GKE cluster (where WordPress and the ingress controller will run) and a Google Cloud SQL instance for WordPress.

Make sure gcloud is configured for the target project and that the required APIs are enabled!
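If you want to check the APIs up front, here is a minimal sketch. It assumes the three APIs that the Terraform code in this post enables are the ones you need. The helper name missing_apis is made up for illustration.

```shell
#!/bin/bash
# APIs this walkthrough relies on (the same list the Terraform code enables).
REQUIRED_APIS="container.googleapis.com sqladmin.googleapis.com serviceusage.googleapis.com"

# missing_apis ENABLED_LIST
# Prints every required API that does not appear in the
# newline-separated ENABLED_LIST. (Hypothetical helper.)
missing_apis() {
  local enabled="$1" api
  for api in $REQUIRED_APIS; do
    printf '%s\n' "$enabled" | grep -qxF "$api" || printf '%s\n' "$api"
  done
}

# Usage (needs an authenticated gcloud pointed at your project):
#   enabled="$(gcloud services list --enabled --format='value(config.name)')"
#   missing_apis "$enabled"
```

An empty result means all three APIs are already enabled.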

Infrastructure part

Terraform

provider that we are going to use

### providers.tf

terraform {

required_providers {

local = {

source = "hashicorp/local"

version = "~> 2.5"

}

google = {

source = "hashicorp/google"

version = "~> 5.23"

}

null = {

source = "hashicorp/null"

version = "= 3.2.2"

}

random = {

source = "hashicorp/random"

version = "~> 3.6"

}

}

}

provider "google" {

credentials = file(var.google_credentials_file)

project = var.project_id

region = var.region

}

provider "local" {

}

provider "null" {

}

provider "random" {

}

Variables we are going to use; change them to suit your setup

### vars.tf

variable "project_id" {

description = "Your Google Cloud project ID"

type = string

}

variable "google_credentials_file" {

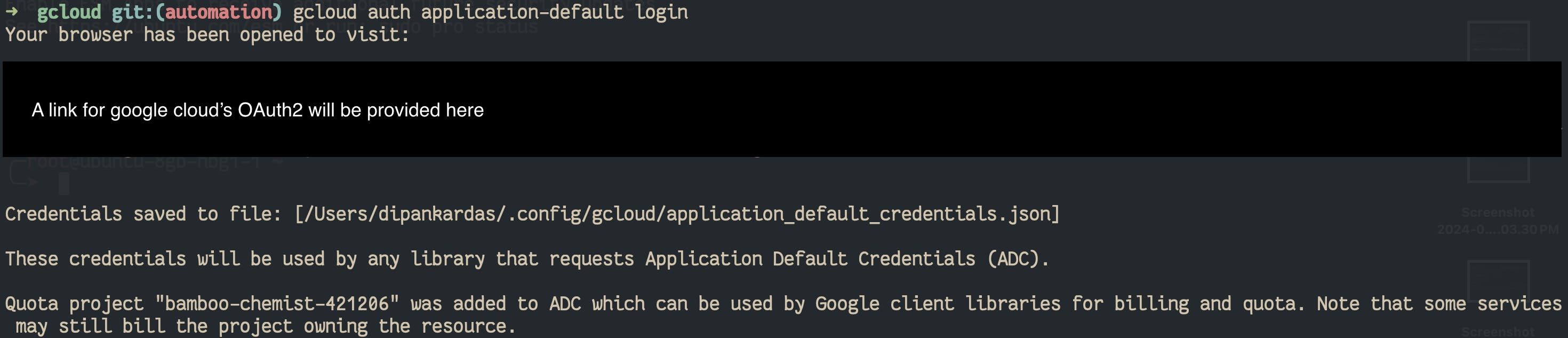

description = "google credentials file execute $ gcloud auth application-default login"

default = "/Users/dipankardas/.config/gcloud/application_default_credentials.json"

}

variable "label_node_pool" {

type = map(string)

default = {

environment = "dev"

type = "init-custom"

}

}

variable "region" {

description = "Google Cloud region where resources will be deployed"

default = "asia-south1"

type = string

}

variable "cluster_name" {

description = "Name of the GKE cluster"

default = "wordpress-k8s"

type = string

}

variable "node_machine_type" {

description = "Machine type for GKE nodes"

default = "e2-medium"

type = string

}

variable "node_disk_size_gb" {

description = "Disk size for GKE nodes (in GB)"

default = 30

type = number

}

variable "max_cluster_mem_limit" {

description = "Maximum memory (in GB) for the entire cluster"

default = 64

type = number

}

variable "min_cluster_mem_limit" {

description = "Minimum memory (in GB) for the entire cluster"

default = 8

type = number

}

variable "max_cluster_cpu_limit" {

description = "Maximum number of CPU cores for the entire cluster"

default = 32

type = number

}

variable "min_cluster_cpu_limit" {

description = "Minimum number of CPU cores for the entire cluster"

default = 4

type = number

}

variable "sql_instance_name" {

description = "Name of the Cloud SQL instance"

default = "wordpress-sql-instance"

type = string

}

variable "sql_tier" {

description = "Tier for the Cloud SQL instance"

default = "db-f1-micro"

}

variable "sql_db" {

description = "sql database name"

default = "wordpress"

}

variable "sql_user" {

description = "sql username"

default = "wordpress"

}

variable "sql_iam_name" {

default = "cloudsql-proxy"

}

variable "k8s_ns" {

default = "demo"

}

variable "k8s_certmanager_version" {

default = "1.14.3"

}

variable "k8s_nginx_ingress_version" {

default = "1.10.0"

}

Main terraform resources to provision the cluster as well as the terr

### main.tf

resource "google_container_node_pool" "primary_preemptible_nodes" {

name = "custom-pool"

cluster = google_container_cluster.my_cluster.id

location = var.region

initial_node_count = 1 # NOTE: its no of nodes per zone

node_config {

preemptible = true

machine_type = var.node_machine_type

disk_size_gb = var.node_disk_size_gb

oauth_scopes = [

"https://www.googleapis.com/auth/cloud-platform",

"https://www.googleapis.com/auth/logging.write",

"https://www.googleapis.com/auth/monitoring"

]

labels = var.label_node_pool

}

autoscaling {

total_max_node_count = 6

total_min_node_count = 3

}

management {

auto_repair = true

auto_upgrade = true

}

provisioner "local-exec" {

command = "gcloud container clusters get-credentials ${var.cluster_name} --project ${var.project_id} --region ${var.region} && kubectl cluster-info"

interpreter = ["/bin/zsh", "-c"]

working_dir = path.module

}

}

resource "null_resource" "enable_api" {

provisioner "local-exec" {

command = "gcloud services enable container.googleapis.com sqladmin.googleapis.com serviceusage.googleapis.com"

interpreter = ["/bin/zsh", "-c"]

working_dir = path.module

}

}

resource "google_container_cluster" "my_cluster" {

depends_on = [

null_resource.enable_api

]

name = var.cluster_name

location = var.region

deletion_protection = false

# datapath_provider = "ADVANCED_DATAPATH"

# NOTE We can't create a cluster with no node pool defined, but we want to only use

# separately managed node pools. So we create the smallest possible default

# node pool and immediately delete it.

initial_node_count = 1 # NOTE: its no of nodes per zone

remove_default_node_pool = true

cluster_autoscaling {

enabled = true

resource_limits {

resource_type = "cpu"

maximum = var.max_cluster_cpu_limit

minimum = var.min_cluster_cpu_limit

}

resource_limits {

resource_type = "memory"

maximum = var.max_cluster_mem_limit

minimum = var.min_cluster_mem_limit

}

auto_provisioning_defaults {

disk_size = var.node_disk_size_gb

oauth_scopes = [

"https://www.googleapis.com/auth/cloud-platform",

"https://www.googleapis.com/auth/logging.write",

"https://www.googleapis.com/auth/monitoring"

]

}

}

vertical_pod_autoscaling {

enabled = true

}

addons_config {

horizontal_pod_autoscaling {

disabled = false

}

}

}

resource "google_sql_database" "database_deletion_policy" {

name = var.sql_db

instance = google_sql_database_instance.my_sql_instance.name

deletion_policy = "ABANDON"

}

resource "random_password" "password" {

length = 16

special = true

override_special = "!#$%&*()-_=+[]{}<>:?"

}

resource "google_sql_user" "users" {

name = var.sql_user

instance = google_sql_database_instance.my_sql_instance.name

host = "%"

password = random_password.password.result

}

resource "google_sql_database_instance" "my_sql_instance" {

depends_on = [

null_resource.enable_api

]

name = var.sql_instance_name

database_version = "MYSQL_8_0"

region = var.region

deletion_protection = false

settings {

tier = var.sql_tier

}

}

resource "google_service_account" "my_service_account" {

depends_on = [

google_container_node_pool.primary_preemptible_nodes

]

account_id = var.sql_iam_name

display_name = var.sql_iam_name

}

resource "google_project_iam_binding" "cloudsql_binding" {

project = var.project_id

role = "roles/cloudsql.client"

members = [

"serviceAccount:${google_service_account.my_service_account.email}",

]

}

resource "google_service_account_key" "my_key" {

service_account_id = google_service_account.my_service_account.name

}

resource "local_file" "myaccountjson" {

content = base64decode(google_service_account_key.my_key.private_key)

filename = "key.json"

depends_on = [

google_sql_database.database_deletion_policy,

google_sql_user.users

]

provisioner "local-exec" {

environment = {

"NAMESPACE" = "${var.k8s_ns}"

"SQL_USR" = "${var.sql_user}"

"SQL_PASS" = random_password.password.result

"CERTMANAGER_VER" = "${var.k8s_certmanager_version}"

"NGINXINGRESS_VER" = "${var.k8s_nginx_ingress_version}"

}

command = "./post-install.sh"

interpreter = ["/bin/bash", "-c"]

working_dir = path.module

}

}

output "sql_connection_name" {

value = google_sql_database_instance.my_sql_instance.connection_name

}

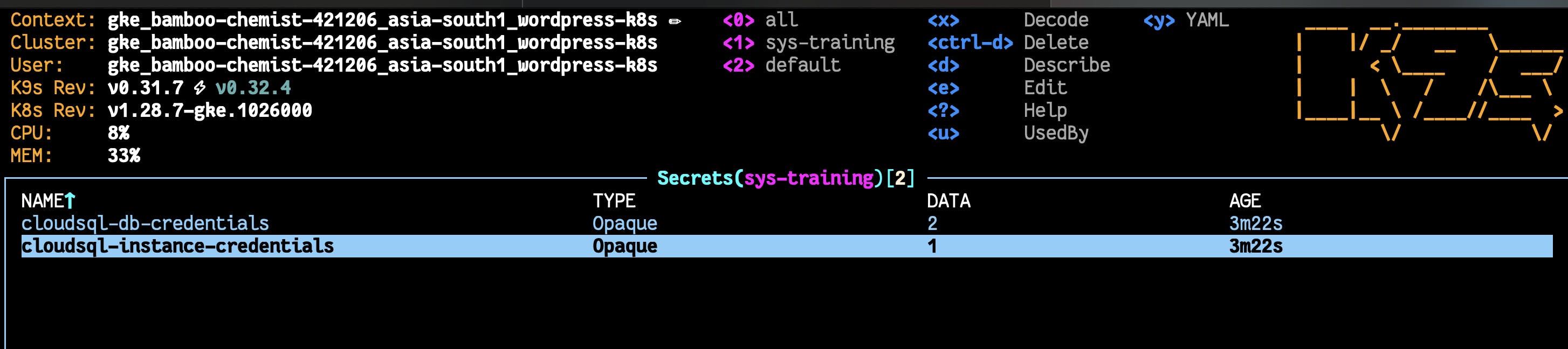

Create a script named post-install.sh

#!/bin/bash

PS4='+\[\033[0;33m\](\[\033[0;36m\]${BASH_SOURCE##*/}:${LINENO}\[\033[0;33m\])\[\033[0m\] '

set -xe

# create the namespace where our workload is going to be deployed

kubectl create ns $NAMESPACE || echo "already created namespace"

# create the secrets for wordpress to work

kubectl delete secret cloudsql-db-credentials --namespace=$NAMESPACE || echo "cloudsql-db-credentials not there"

kubectl create secret generic cloudsql-db-credentials --from-literal=username="$SQL_USR" --from-literal=password="$SQL_PASS" --namespace="$NAMESPACE"

kubectl delete secret cloudsql-instance-credentials --namespace=$NAMESPACE || echo "cloudsql-instance-credentials not there"

kubectl create secret generic cloudsql-instance-credentials --from-file key.json --namespace=$NAMESPACE

# install certmanager and nginx controller for HTTPS and ingress

helm repo add cert-manager https://charts.jetstack.io

kubectl create ns cert-manager || echo "already created namespace"

helm install my-cert-manager cert-manager/cert-manager --namespace cert-manager --version $CERTMANAGER_VER --set installCRDs=true --set global.leaderElection.namespace=cert-manager || echo "already there"

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v$NGINXINGRESS_VER/deploy/static/provider/cloud/deploy.yaml
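The script deletes and recreates each secret, which leaves a short window with no secret in the namespace. One common alternative is to render the secret locally and pipe it to kubectl apply. The sketch below assumes your kubectl supports --dry-run=client, and upsert_secret is a hypothetical helper name.

```shell
#!/bin/bash
# upsert_secret NAMESPACE NAME [kubectl create secret flags...]
# Renders the secret locally, then applies it, so re-runs update the
# secret in place instead of deleting and recreating it.
# (upsert_secret is a hypothetical helper, not part of the original script.)
upsert_secret() {
  local ns="$1" name="$2"
  shift 2
  kubectl create secret generic "$name" --namespace="$ns" "$@" \
    --dry-run=client -o yaml | kubectl apply -f -
}

# Usage inside post-install.sh:
#   upsert_secret "$NAMESPACE" cloudsql-db-credentials \
#     --from-literal=username="$SQL_USR" --from-literal=password="$SQL_PASS"
#   upsert_secret "$NAMESPACE" cloudsql-instance-credentials --from-file key.json
```

Quoting "$SQL_PASS" matters here because the generated password can contain shell metacharacters.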

Authenticate gcloud before running Terraform:

#!/bin/bash

gcloud init

gcloud auth application-default login

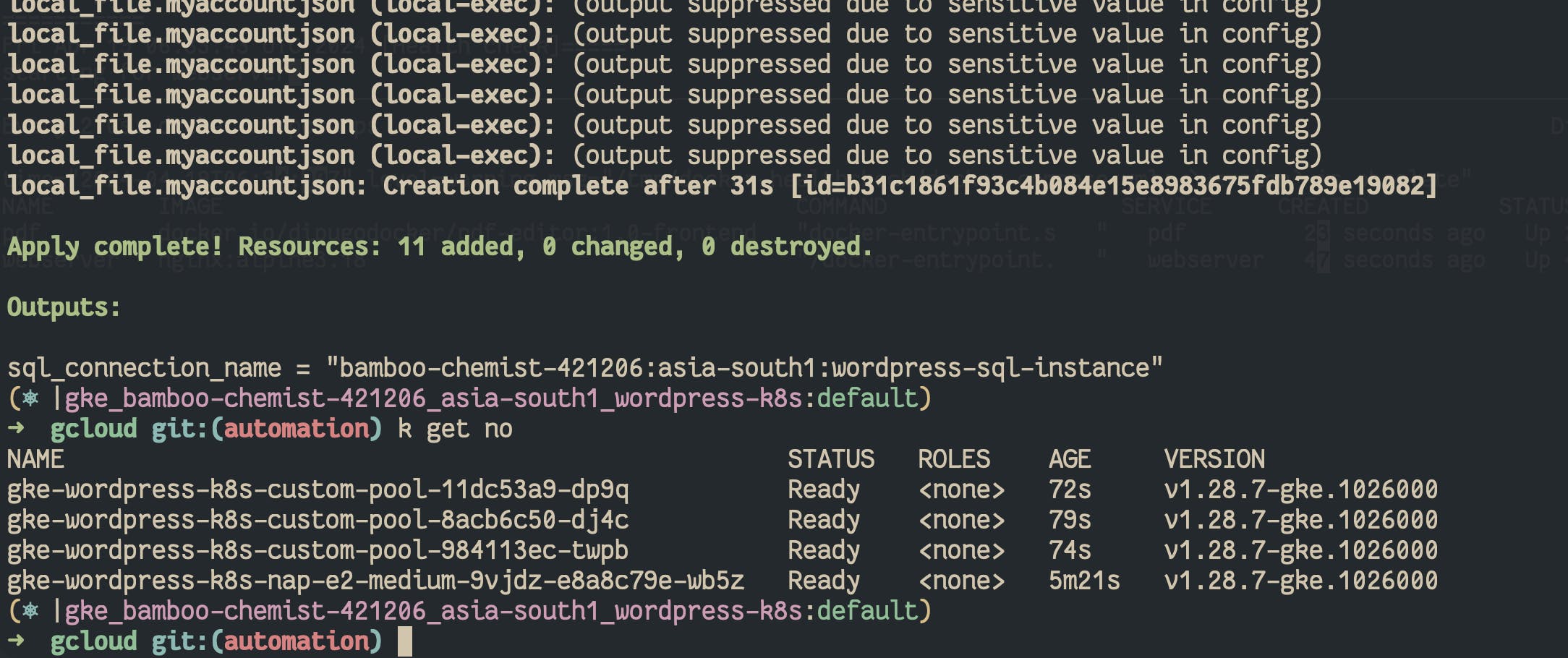

Once these steps are done, you can initialize the Terraform module and apply it

export TF_VAR_project_id="<project>"

terraform init

terraform plan

terraform apply

terraform destroy # use this to remove resources

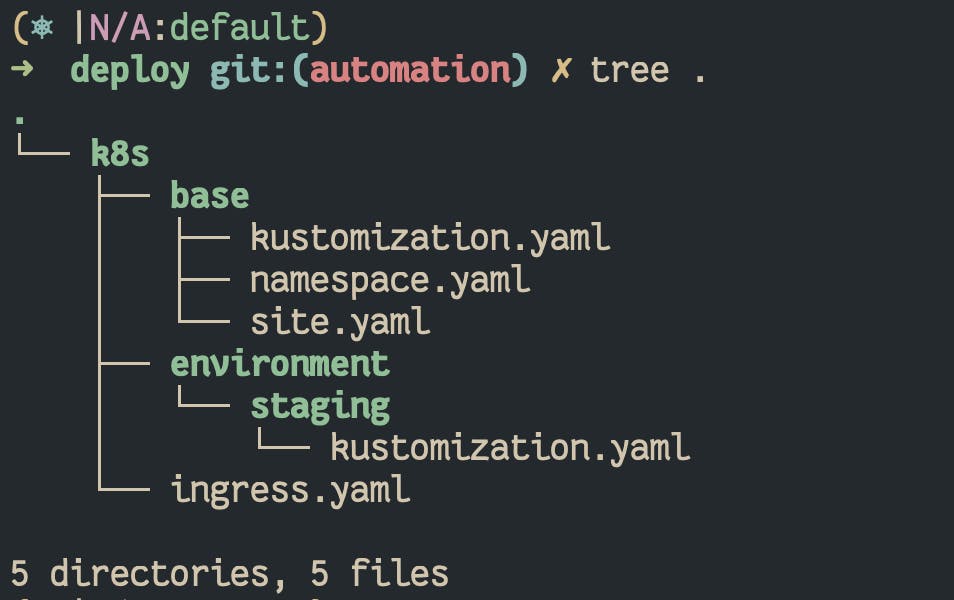

Kubernetes deployment for wordpress

this is the folder structure

ingress.yaml

apiVersion: cert-manager.io/v1

kind: ClusterIssuer

metadata:

name: kubeissuer-wp

namespace: demo

spec:

acme:

server: https://acme-v02.api.letsencrypt.org/directory

email: <>

privateKeySecretRef:

name: kubeissuer-wp

solvers:

- http01:

ingress:

class: nginx

---

apiVersion: cert-manager.io/v1

kind: Certificate

metadata:

namespace: demo

name: kubecert-wp

spec:

secretName: tls-wp

issuerRef:

name: kubeissuer-wp

kind: ClusterIssuer

commonName: <>

dnsNames:

- <>

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

annotations:

cert-manager.io/cluster-issuer: kubeissuer-wp

kubernetes.io/ingress.class: nginx

namespace: demo

name: kube-certs-ingress

spec:

ingressClassName: nginx

tls:

- hosts:

- <>

secretName: tls-wp

rules:

- host: <>

http:

paths:

- backend:

service:

name: wordpress

port:

number: 80

path: /

pathType: Prefix

environment/staging/kustomization.yaml

# The only challenge not addressed here
# is how to configure the number of replicas when using a GitOps tool like Argo CD.
# Other than that, you can set newTag from your GitOps pipeline
# using j2 templating
namespace: demo
replicas:
  - name: wordpress
    count: 1
images:
  - newName: ghcr.io/<>
    name: placeholder-base-image
    newTag: 66d9bf1
resources:
  - ../../base
patches:
  - target:
      group: apps
      version: v1
      kind: Deployment
      name: wordpress
    patch: |-
      - op: replace
        path: /spec/template/spec/containers/2/command
        value:
          - /cloud_sql_proxy
          - -instances=<GET YOUR MYSQL INSTANCE CONNECTION NAME FROM OUTPUT OF TERRAFORM APPLY>=tcp:3306
          - -credential_file=/secrets/cloudsql/key.json
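To fill in the connection name placeholder in the patch, you can read the sql_connection_name output that main.tf defines. The following is a small sketch; proxy_instances_flag is a hypothetical helper, and the project:region:instance shape check is only a sanity guard.

```shell
#!/bin/bash
# proxy_instances_flag CONNECTION_NAME [PORT]
# Builds the -instances flag for the v1 Cloud SQL proxy used in this post.
# (Hypothetical helper for illustration.)
proxy_instances_flag() {
  local conn="$1" port="${2:-3306}"
  # A Cloud SQL connection name has the form project:region:instance.
  case "$conn" in
    *:*:*) printf -- '-instances=%s=tcp:%s\n' "$conn" "$port" ;;
    *) echo "unexpected connection name: $conn" >&2; return 1 ;;
  esac
}

# Usage (run from the Terraform directory after apply):
#   proxy_instances_flag "$(terraform output -raw sql_connection_name)"
```

Paste the printed flag into the patch in environment/staging/kustomization.yaml.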

Next is the big file

base/site.yaml

Set images[0].newName in the staging kustomization to your own image. You can also configure the resource limits for the PHP container

---

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

name: wordpress-hpa

spec:

scaleTargetRef:

apiVersion: apps/v1

kind: Deployment

name: wordpress

minReplicas: 1

maxReplicas: 10

metrics:

- type: Resource

resource:

name: cpu

target:

type: Utilization

averageUtilization: 70

- type: Resource

resource:

name: memory

target:

type: AverageValue

averageValue: 350Mi

---

apiVersion: v1

kind: ConfigMap

metadata:

name: fpm-conf

data:

www.conf: |

[www]

user = www-data

group = www-data

listen = 127.0.0.1:9000

listen.backlog = 65535

pm = dynamic

pm.max_children = 205

pm.start_servers = 16

pm.min_spare_servers = 16

pm.max_spare_servers = 32

pm.max_requests = 500

ping.path = /healthz

ping.response = ok

request_terminate_timeout = 300

php_admin_value[memory_limit] = 2048M

---

apiVersion: v1

kind: ConfigMap

metadata:

name: nginx-conf

data:

default.conf: |

server {

server_name _;

index index.php index.html index.htm;

root /var/www/html;

location / {

try_files $uri $uri/ /index.php$is_args$args;

}

location ~ ^/(healthz)$ {

include fastcgi_params;

fastcgi_pass 127.0.0.1:9000;

fastcgi_param SCRIPT_FILENAME $fastcgi_script_name;

}

location ~ \.php$ {

try_files $uri =404;

fastcgi_split_path_info ^(.+\.php)(/.+)$;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

include fastcgi_params;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

fastcgi_param PATH_INFO $fastcgi_path_info;

}

location ~ /\.ht {

deny all;

}

location = /favicon.ico {

log_not_found off; access_log off;

}

location = /robots.txt {

log_not_found off; access_log off; allow all;

}

location ~* \.(css|gif|ico|jpeg|jpg|js|png)$ {

expires max;

log_not_found off;

}

}

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: wordpress

spec:

selector:

matchLabels:

app: wordpress

template:

metadata:

labels:

app: wordpress

spec:

volumes:

- name: share-volumes

emptyDir: {}

- configMap:

name: fpm-conf

name: fpm-conf

- configMap:

name: nginx-conf

name: config

- name: cloudsql-instance-credentials

secret:

defaultMode: 420

secretName: cloudsql-instance-credentials

initContainers:

- name: volume-format

image: placeholder-base-image

command: ["cp", "-rv", "/var/www/html/.", "/mnt"]

volumeMounts:

- name: share-volumes

mountPath: /mnt

containers:

- name: wordpress

image: placeholder-base-image

resources:

limits:

memory: "300Mi"

cpu: "500m"

volumeMounts:

- name: share-volumes

mountPath: /var/www/html

- mountPath: /usr/local/etc/php-fpm.d/www.conf

name: fpm-conf

subPath: www.conf

readinessProbe:

tcpSocket:

port: 9000

initialDelaySeconds: 15

periodSeconds: 10

livenessProbe:

tcpSocket:

port: 9000

initialDelaySeconds: 15

periodSeconds: 10

env:

- name: WORDPRESS_DB_HOST

value: 127.0.0.1:3306

- name: WORDPRESS_DB_USER

valueFrom:

secretKeyRef:

key: username

name: cloudsql-db-credentials

- name: WORDPRESS_DB_PASSWORD

valueFrom:

secretKeyRef:

key: password

name: cloudsql-db-credentials

ports:

- containerPort: 9000

name: fpm

- name: nginx

image: nginx:alpine

resources:

limits:

memory: "100Mi"

cpu: "50m"

volumeMounts:

- mountPath: /etc/nginx/conf.d/default.conf

name: config

subPath: default.conf

- name: share-volumes

mountPath: /var/www/html

livenessProbe:

httpGet:

path: "/healthz"

port: 80

httpHeaders:

- name: Host

value: localhost

initialDelaySeconds: 3

periodSeconds: 3

readinessProbe:

tcpSocket:

port: 80

initialDelaySeconds: 15

periodSeconds: 10

ports:

- containerPort: 80

name: web

- name: cloudsql-proxy

command:

- /cloud_sql_proxy

- -instances=INSTANCE_CONNECTION_NAME=tcp:3306

- -credential_file=/secrets/cloudsql/key.json

image: gcr.io/cloudsql-docker/gce-proxy:1.33.2

imagePullPolicy: IfNotPresent

resources:

limits:

memory: "100Mi"

cpu: "100m"

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- NET_RAW

runAsUser: 2

volumeMounts:

- mountPath: /secrets/cloudsql

name: cloudsql-instance-credentials

readOnly: true

---

apiVersion: v1

kind: Service

metadata:

name: wordpress

spec:

selector:

app: wordpress

ports:

- port: 80

name: web

- port: 9000

name: fpm

base/kustomization.yaml

resources:
  # - namespace.yaml
  - site.yaml

Apply the kustomize overlay:

kubectl apply -k environment/staging/

# For the ingress, first grab the external IP from the

# LoadBalancer-type service of the deployed nginx ingress controller,

# set the DNS A record to it,

# then fill in the placeholders in ingress.yaml

kubectl apply -f ingress.yaml
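Here is one way to grab the external IP mentioned in the comments above. This is a sketch that assumes the controller was installed from the upstream deploy.yaml, which creates the ingress-nginx namespace and the ingress-nginx-controller Service. Adjust both names if you installed it another way.

```shell
#!/bin/bash
# ingress_external_ip
# Prints the external IP of the ingress-nginx controller Service.
# Namespace and Service name are assumptions based on the upstream deploy.yaml.
ingress_external_ip() {
  kubectl get service ingress-nginx-controller \
    --namespace ingress-nginx \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage:
#   ingress_external_ip
# Empty output usually means the load balancer is still being provisioned.
```

Point your DNS A record at the printed address before applying ingress.yaml, since the HTTP-01 challenge needs the domain to resolve to the ingress.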

From here you can head over to your domain to complete the WordPress setup

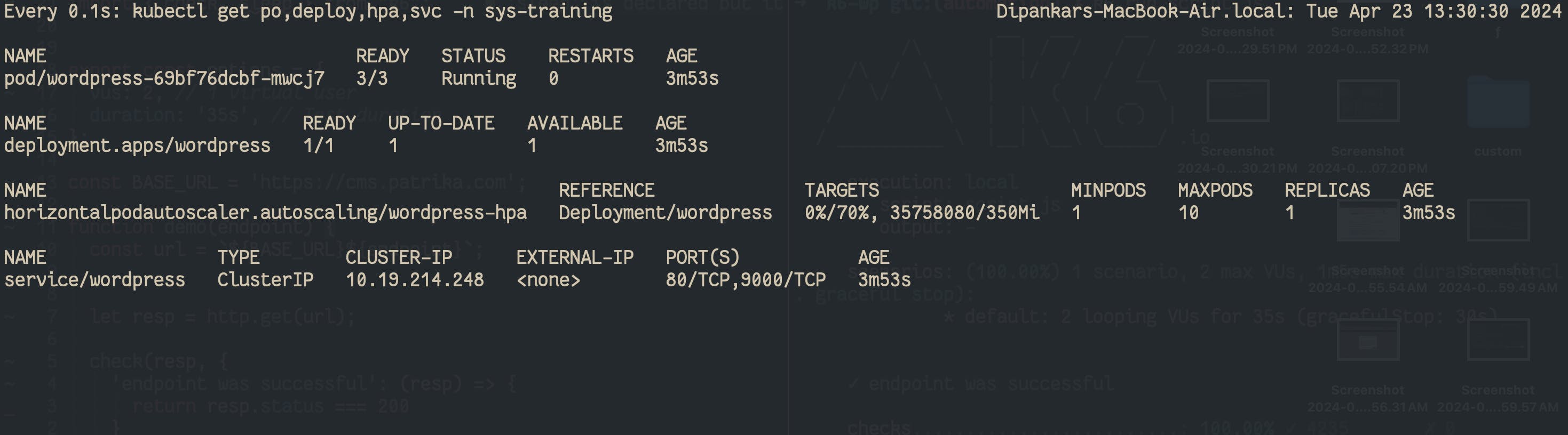

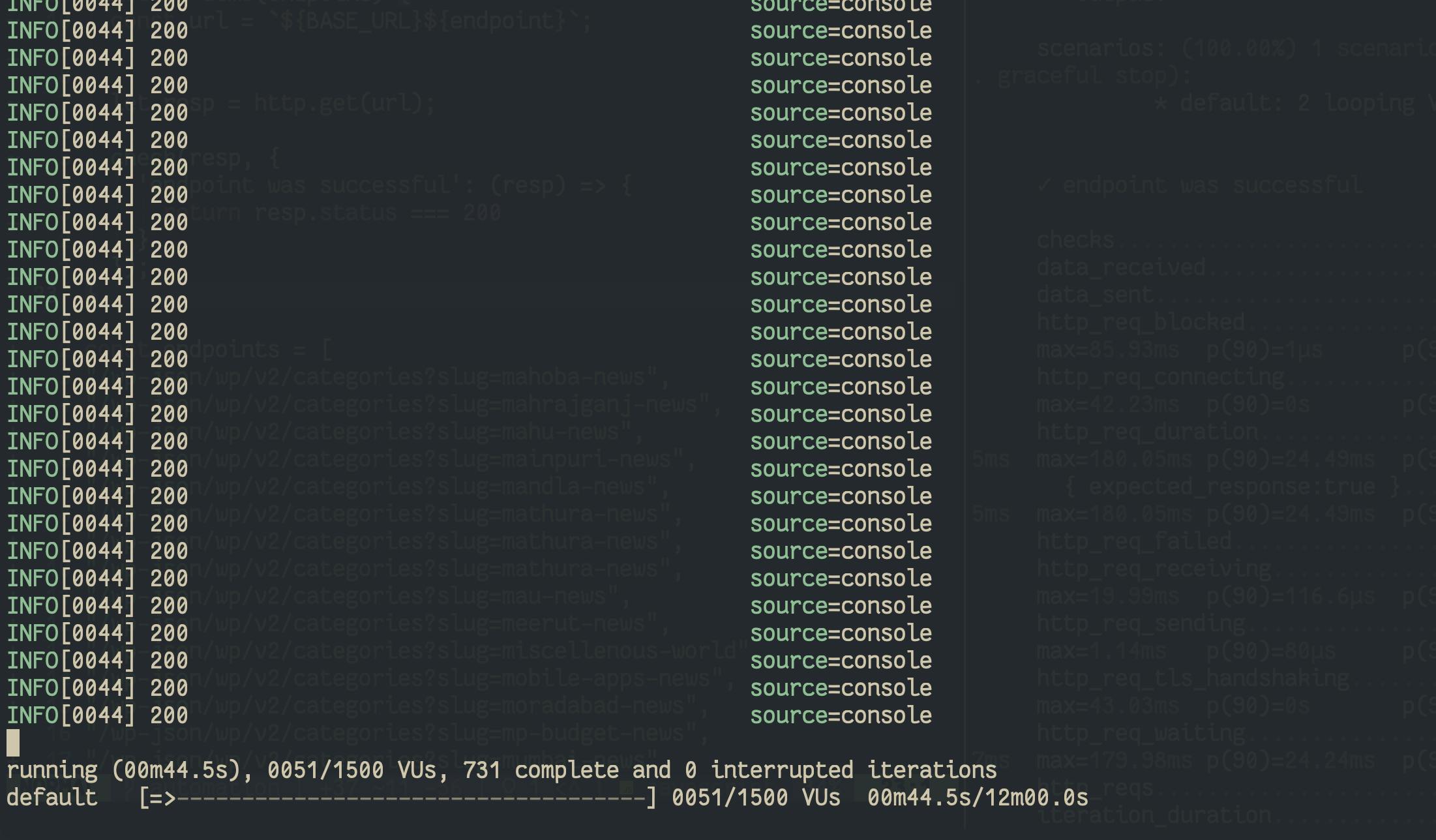

Let's do some load testing to see the power of HPA

For that we will use k6

import http from "k6/http";
import { check, sleep } from "k6";

export const options = {
  stages: [
    { duration: "30s", target: 20 },
    { duration: "1m", target: 150 },
    { duration: "5m", target: 500 },
    { duration: "10m", target: 1500 },
    { duration: "6m", target: 500 },
    { duration: "30s", target: 0 },
  ],
};

export default function () {
  const res = http.get("https://<your domain>");
  check(res, {
    "status was 200": (r) => {
      console.log(r.status);
      return r.status == 200;
    },
  });
  sleep(1);
}

k6 run script.js
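While the test runs, it helps to know roughly how the HPA picks a replica count. The Kubernetes docs give the ratio desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). Below is a simplified sketch of that formula in integer arithmetic. It ignores the HPA tolerance and stabilization behavior, and hpa_desired is a hypothetical helper name.

```shell
#!/bin/bash
# hpa_desired CURRENT_REPLICAS CURRENT_METRIC TARGET_METRIC MIN MAX
# Integer version of ceil(current_replicas * current_metric / target_metric),
# clamped to [MIN, MAX]. Simplified: no tolerance, no stabilization window.
hpa_desired() {
  local replicas="$1" current="$2" target="$3" min="$4" max="$5"
  local desired=$(( (replicas * current + target - 1) / target ))
  (( desired < min )) && desired="$min"
  (( desired > max )) && desired="$max"
  echo "$desired"
}

# With the HPA from site.yaml (70% CPU target, 1 to 10 replicas):
hpa_desired 2 140 70 1 10   # prints 4
hpa_desired 10 200 70 1 10  # prints 10 (capped by maxReplicas)
```

To see the real decisions during the run, you can watch the object with kubectl get hpa wordpress-hpa -n demo --watch.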

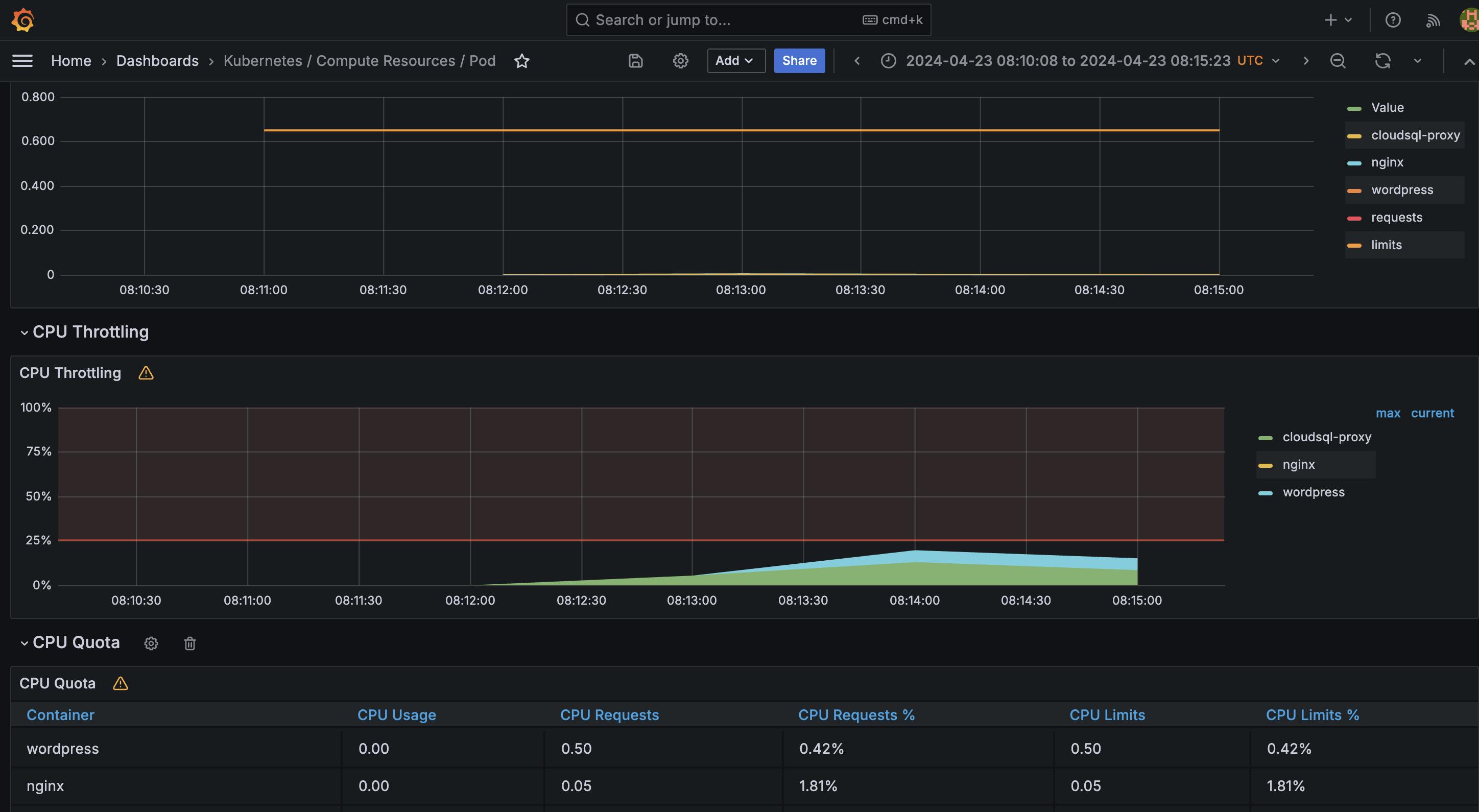

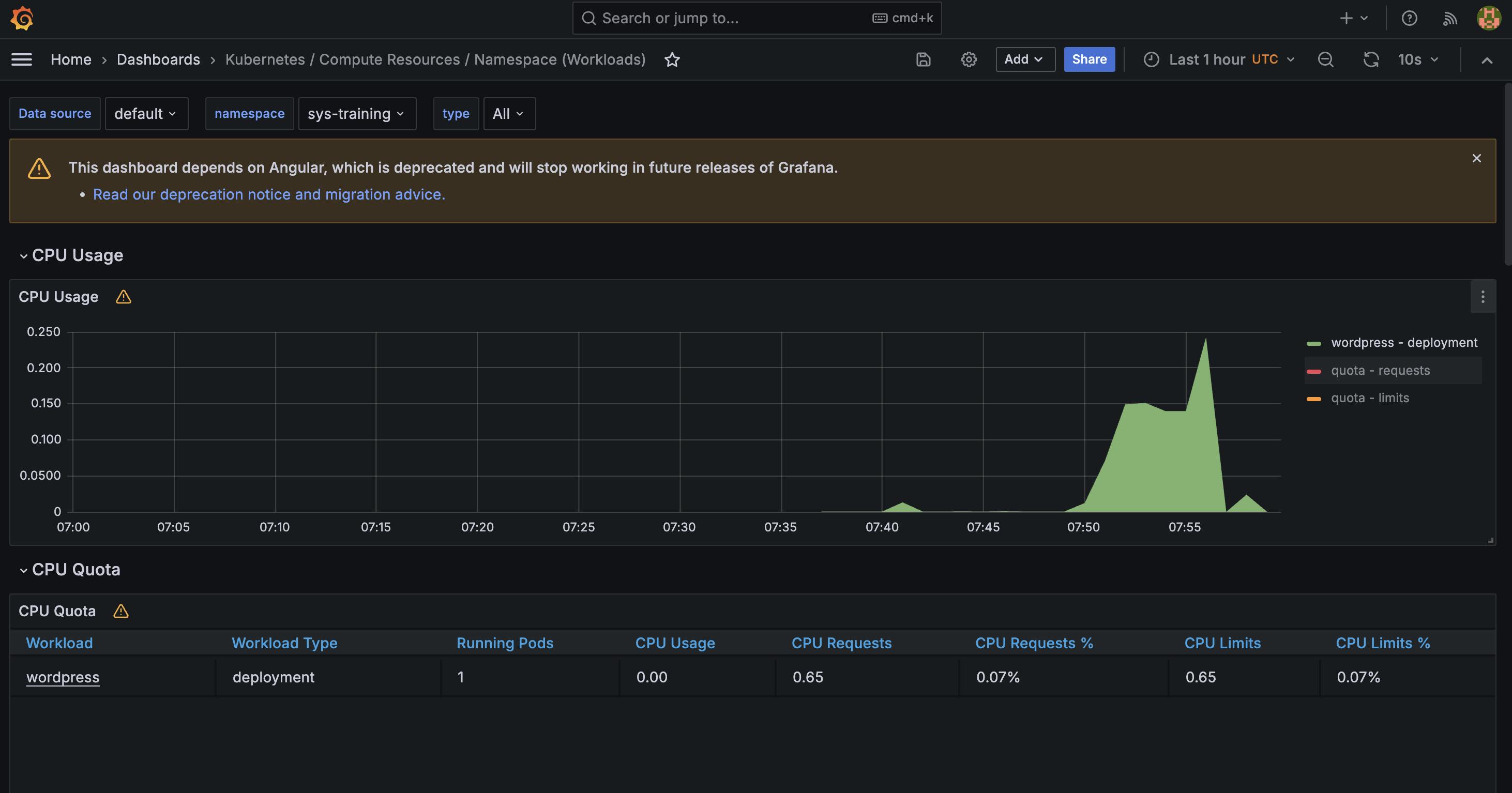

We can also see which containers in the pod had high resource utilization

wordpress-fpm and MySQL became bottlenecks under heavy traffic, so you can adjust the limits and also choose a bigger node size, so that there is headroom while the cluster provisions a new node and the new WordPress pod passes its health checks before it serves traffic (these are all small optimizations)
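When adjusting the limits, a common rule of thumb is to keep pm.max_children within what the container memory limit can hold. The sketch below only does that arithmetic. The per-worker and reserve figures are assumptions, so measure your own site before trusting them. fpm_max_children is a hypothetical helper name.

```shell
#!/bin/bash
# fpm_max_children LIMIT_MIB PER_CHILD_MIB [RESERVE_MIB]
# Rule-of-thumb upper bound for pm.max_children:
#   (container memory limit - reserve for the master process) / memory per worker
# PER_CHILD_MIB and RESERVE_MIB are assumptions; measure them on your own site.
fpm_max_children() {
  local limit="$1" per_child="$2" reserve="${3:-0}"
  echo $(( (limit - reserve) / per_child ))
}

# The wordpress container in site.yaml is limited to 300Mi. Assuming about
# 40 MiB per worker and 20 MiB reserved (both hypothetical figures):
fpm_max_children 300 40 20   # prints 7
```

Under these assumed figures the result is far below the pm.max_children = 205 set in the ConfigMap, so either the memory limit or the worker count may need revisiting for real workloads.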

For monitoring, you can deploy the kube-prometheus-stack if you are getting started or new to monitoring

It installs Prometheus along with Grafana

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm install my-kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 57.2.0